How Companies Can Test Their Systems Against AI-powered Attacks Like GTG-1002

GTG-1002 confirmed that AI can run most of an intrusion on its own. This guide explains what that attack pattern looks like and how your team can safely test your systems against it.

The most important lesson from GTG-1002 is not simply that attackers have begun experimenting with AI. Many teams assumed that already. The real shift is that Anthropic has now confirmed an AI-powered attack running the majority of an intrusion lifecycle on its own. GTG-1002 shows that AI is no longer assisting attackers at the edges. It is driving the operation, with speed, scale, and persistence that outpace what human-led intrusion attempts can achieve.

Many organizations looked at that report and asked the same question: how would our systems stand up to that style of attack? That question is difficult to answer with traditional testing approaches. Human-led pentests are time-limited, scanners miss complex chains, and not all engineering teams have internal security staff who can run creative exploit development at the pace AI now enables.

How your team can mirror AI-powered attack depth safely for proactive defense

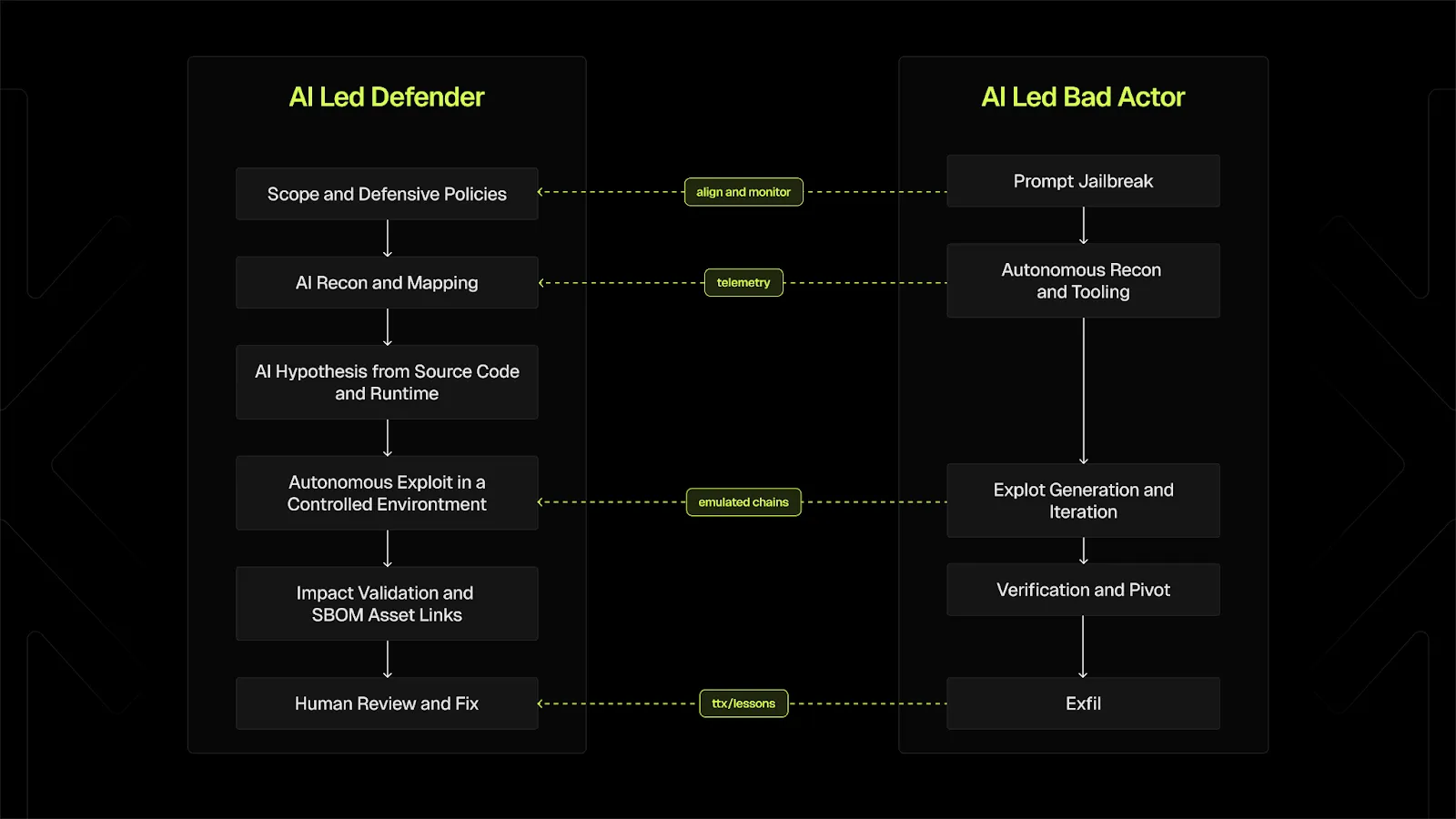

Over the past year, we have built and refined XBOW’s testing loop, which mirrors the pattern demonstrated in GTG-1002, but safely and for a completely different purpose: to help teams understand their real exposure before attackers arrive.

XBOW continuously performs reconnaissance, generates hypotheses from both code and runtime behavior, executes multiple exploit paths in parallel, validates impact, and hands results to the people responsible for fixing issues. Organizations needed this capability long before any public AI-powered attack made headlines.
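The shape of one round of that loop (several hypotheses tried in parallel, then only validated results kept) can be sketched in a few lines. This is an illustration under assumptions, not XBOW's implementation: the `Hypothesis` type, the `run_round` function, and the idea of passing each exploit path as a plain callable are all hypothetical, and the attempts here would be harmless stand-ins.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Hypothesis:
    """One idea to test (hypothetical structure, for illustration only)."""
    name: str
    attempt: Callable[[], str]        # runs one path and returns raw evidence
    validate: Callable[[str], bool]   # decides whether the evidence proves impact


def run_round(hypotheses: List[Hypothesis], workers: int = 4) -> List[str]:
    """Try every hypothesis in parallel and return the names that validated."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so evidence lines up with hypotheses.
        evidence = list(pool.map(lambda h: h.attempt(), hypotheses))
    return [h.name for h, e in zip(hypotheses, evidence) if h.validate(e)]
```

Separating `attempt` from `validate` keeps the reporting step honest: a path that ran but produced no proof of impact never reaches the people fixing issues.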

To understand what this looks like in practice, it helps to look at the types of vulnerabilities XBOW has already uncovered. These are findings that would typically require expert human talent and weeks of focused investigation. They surfaced through iterative reasoning, micro-step chain building, and persistent exploration, all running at machine speed.

What XBOW has already found in real applications

Apache Druid zero-day (CVE-2025-27888).

When analyzing Apache Druid, XBOW identified a previously unknown SSRF vulnerability by connecting knowledge from older CVEs to patterns in the live application. XBOW tested historical attack techniques, interpreted subtle error responses, inferred the presence of a hidden proxy endpoint, and iterated through variants until one produced a full internal request. The result was a real zero-day flaw that required a patch from the project maintainers.

Full write-up: CVE-2025-27888: Server-Side Request Forgery via URL Parsing Confusion in Apache Druid Proxy Endpoint.

TiTiler 48-step blind SSRF chain.

In another case, a blind SSRF that many scanners would classify as low severity was escalated into a full file-read exploit. XBOW used 48 successive steps to craft malicious image files, exploit parsing behavior in GDAL, generate a VRT referencing local paths, and convert file contents into pixel values of a one-pixel-high PNG. XBOW downloaded the image, decoded the bytes, and reconstructed the target file. This type of chain is rarely uncovered during a short manual assessment because each step is individually simple but the sequence is long.

Full breakdown: How XBOW Turned a Blind SSRF into a File Reading Oracle.
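The final idea in that chain, carrying bytes as pixel values and reading them back, can be shown in isolation. The sketch below is not XBOW's tooling and involves no GDAL or VRT handling. It assumes an 8-bit grayscale PNG with a single scanline stored with filter type 0, and the function names are hypothetical. It uses only the Python standard library.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def _chunk(kind: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: length, type, data, CRC over type + data."""
    crc = zlib.crc32(kind + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + kind + data + struct.pack(">I", crc)


def bytes_to_row_png(payload: bytes) -> bytes:
    """Store each byte as one grayscale pixel in a one-pixel-high PNG."""
    # IHDR: width, height=1, bit depth 8, color type 0 (grayscale),
    # compression 0, filter method 0, no interlacing.
    ihdr = struct.pack(">IIBBBBB", len(payload), 1, 8, 0, 0, 0, 0)
    scanline = b"\x00" + payload  # leading 0 = filter type "None"
    return (PNG_SIGNATURE + _chunk(b"IHDR", ihdr)
            + _chunk(b"IDAT", zlib.compress(scanline)) + _chunk(b"IEND", b""))


def row_png_to_bytes(png: bytes) -> bytes:
    """Recover the original bytes from a PNG written in the layout above."""
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos, width, idat = len(PNG_SIGNATURE), None, b""
    while pos + 8 <= len(png):
        (length,) = struct.unpack(">I", png[pos:pos + 4])
        kind = png[pos + 4:pos + 8]
        data = png[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC
        if kind == b"IHDR":
            width, height, depth, color = struct.unpack(">IIBB", data[:10])
            if (height, depth, color) != (1, 8, 0):
                raise ValueError("expected a 1-pixel-high 8-bit grayscale image")
        elif kind == b"IDAT":
            idat += data
        elif kind == b"IEND":
            break
    if width is None:
        raise ValueError("missing IHDR chunk")
    raw = zlib.decompress(idat)
    if raw[0] != 0:
        raise ValueError("this sketch only handles filter type 0")
    return raw[1:1 + width]
```

A PNG produced by a real imaging library may use other filter types or color modes, which this sketch deliberately rejects rather than guessing at.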

Static analysis plus dynamic testing in a loop.

XBOW merges source code analysis with dynamic attack execution. It begins by reading source code to identify potentially risky functions, routes, or components, then immediately attacks those areas in the live application to determine whether the issue is exploitable. XBOW loops until it reaches a definitive answer. This hybrid approach significantly reduces false positives and uncovers vulnerabilities that neither static scanners nor DAST tools can find alone.

More detail: How Agentic AI Merges Static and Dynamic Testing.
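The hybrid idea can be reduced to a toy example: a static pass nominates candidates, and a dynamic pass decides which ones are real. The sketch below is an assumption-laden illustration, not how XBOW works internally. The regex of risky calls, the `Finding` type, and the `probe` callable (a stand-in for sending a request to a test instance) are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List

# Deliberately tiny list of calls a static pass might flag (illustrative only).
RISKY_CALLS = re.compile(r"\b(eval|os\.system|pickle\.loads)\s*\(")


@dataclass
class Finding:
    route: str
    status: str  # "confirmed" or "not reproduced"


def static_candidates(sources: Dict[str, str]) -> List[str]:
    """Static pass: routes whose handler source contains a risky call."""
    return [route for route, code in sources.items() if RISKY_CALLS.search(code)]


def triage(sources: Dict[str, str],
           probe: Callable[[str, str], bool],
           payloads: List[str]) -> List[Finding]:
    """Dynamic pass: keep trying payloads on each candidate until one proves it."""
    findings = []
    for route in static_candidates(sources):
        confirmed = any(probe(route, payload) for payload in payloads)
        findings.append(Finding(route, "confirmed" if confirmed else "not reproduced"))
    return findings
```

A handler that calls `eval` on a constant string would be flagged by the static pass but end up as "not reproduced", which is the false-positive reduction the paragraph above describes.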

These examples show a clear pattern. AI gives attackers speed and scale. Modern teams can use the same class of capability to gain a deeper understanding of their own applications. Your organization has something attackers do not: access to source code, architecture, and intended behavior. When testing tools can combine that knowledge with AI-driven reasoning, teams can explore their systems with more depth and creativity than any external attacker can.

What your team can do now

A few actions make a material difference. Begin by ensuring applications can be tested in a safe and isolated environment. Adopt testing approaches that combine code understanding with runtime validation so issues can be proven, not guessed. Treat the configuration and alignment of any AI used in testing as part of the security program. And test against realistic exploit chains rather than relying on scanners that cannot model how AI-powered attacks now operate.
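One small, concrete piece of "safe and isolated" is refusing to touch anything outside an agreed scope. The sketch below shows one way such a guard might look. It is an assumption, not part of any XBOW product: the `in_scope` function, the example hostnames, and the network range are hypothetical.

```python
import ipaddress
from typing import Iterable
from urllib.parse import urlsplit


def in_scope(url: str, allowed_hosts: Iterable[str], allowed_nets: Iterable[str]) -> bool:
    """Return True only if the URL's host is explicitly allowed for testing."""
    host = urlsplit(url).hostname  # already lowercased; None if there is no host
    if host is None:
        return False
    if host in {h.lower() for h in allowed_hosts}:
        return True
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        return False  # a hostname that is not on the allowlist
    return any(addr in ipaddress.ip_network(net) for net in allowed_nets)
```

Calling a check like this before every request means a misconfigured target list fails closed instead of reaching production.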

If AI-powered attacks are exploring systems continuously, organizations cannot rely on annual or ad-hoc assessments to understand their risk. You can run an autonomous pentest on your most critical application today and see the types of vulnerabilities this approach uncovers. The results are validated, reproducible, and structured for rapid remediation.

See the full flow step-by-step

If you want to see how this works end-to-end, we walked through the complete flow in our recent live session, including access setup, scope definition, testing progression, and sample findings.

Watch the full recording here 👉 https://www.youtube.com/watch?v=lT99uaFaE00

If you want to try it immediately, get started today at xbow.com/pentest.
