The Intelligence of a Hacker at the Speed of a Machine

XBOW is an autonomous offensive security platform that delivers the depth and results of a premium pentesting engagement in a fraction of the time.

Independently Validated Through Top Industry Bug Bounty Programs

Can an autonomous system really match the depth of human security research?

Yes.

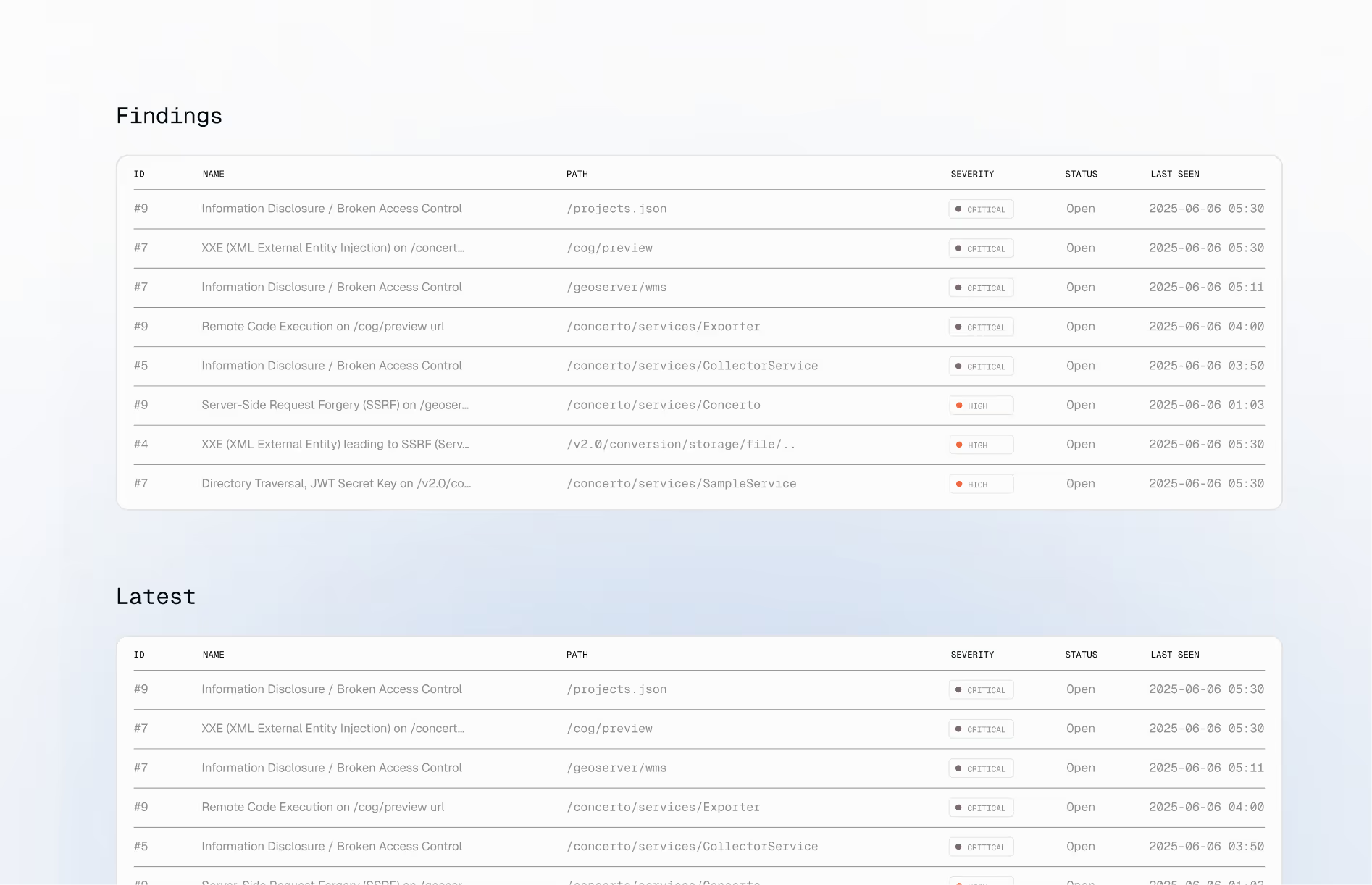

XBOW was validated through extensive testing on HackerOne, where it demonstrated the ability to uncover original, exploitable vulnerabilities in complex, production-grade applications under real-world conditions.

Used By Leading Security Teams

AI Attackers Never Sleep

With coding assistants and vibe coding, AI is increasing the quantity of code, but it’s also enabling attackers to operate around the clock. Traditional pentesting can’t keep up. Teams need to manage a growing gap between what was built, what was tested, and what is actually exploitable.

Autonomous Offensive Security, Built for Depth, Proof, and Speed

XBOW turns penetration testing into a machine-scale offensive security system.

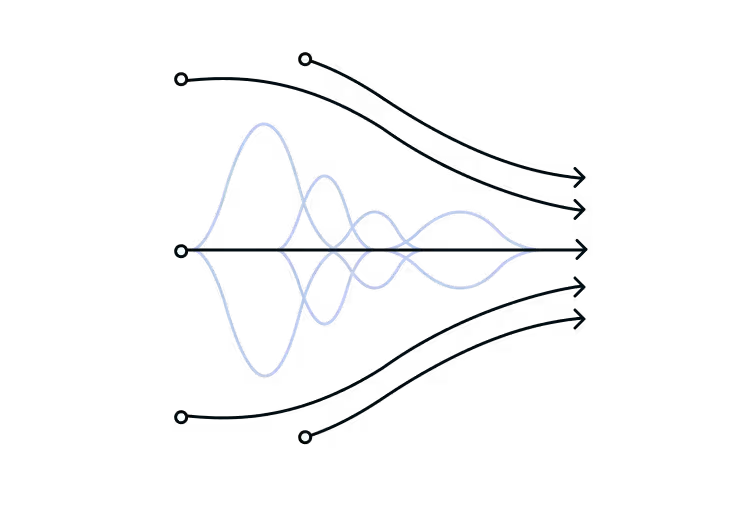

The XBOW platform executes targeted attacks autonomously, allowing teams to explore deeper attack paths than traditional pentesting.

Every potential finding is independently validated through real exploitation, so teams get clear, reproducible proof without waiting on manual validation cycles. That frees security teams to spend more time on investigation, judgment, and remediation.

The result: Continuous security that scales at the pace of AI-native development.

Prove What’s Exploitable

XBOW independently validates every potential finding through real exploitation. No theoretical risk. No scanner noise. Teams get reproducible proof they can trust and act on with confidence.

Test More Deeply

Traditional pentests are constrained by fixed scopes and limited time. XBOW removes those constraints by executing targeted attacks autonomously, expanding depth without extending timelines or increasing operational overhead.

Find the Attack Paths Others Miss

XBOW explores applications more deeply than traditional testing allows, uncovering edge cases and complex interactions that are rarely examined. Every finding is validated through real exploitation, so teams focus on real risk, not theory.

Amplify Human Expertise

XBOW is designed to work alongside security teams, not replace them. By automating exploration and validation, it frees experts to focus on judgment, prioritization, and remediation, where human expertise matters most.

XBOW Delivers Security Outcomes That Matter

Reduce Real Breach Risk

Focus teams on vulnerabilities that are actually exploitable

Shorter Path From Test to Fix

Compress the testing cycle with parallel execution and reproducible findings

Keep Pace With Modern Development

Run deep, exploit-validated testing without slowing releases

Meet Compliance With Confidence

Make pentesting more than an annual checkbox
