XBOW now matches the capabilities of a top human pentester

Five professional pentesters were asked to find and exploit the vulnerabilities in 104 realistic web security benchmarks. The most senior of them, with over twenty years of experience, solved 85% in 40 hours, while the others scored 59% or less. XBOW also scored 85%, and did so in 28 minutes. This illustrates how XBOW can boost offensive security teams, freeing them to focus on the most interesting and challenging parts of their job.

Experiment

At XBOW, we wanted to compare the exploitation skills of our technology with those of professional pentesters. To test this, we commissioned several pentesting firms to create a new set of benchmarks. These benchmarks reflect realistic scenarios and have a crisp success criterion: capturing a flag.

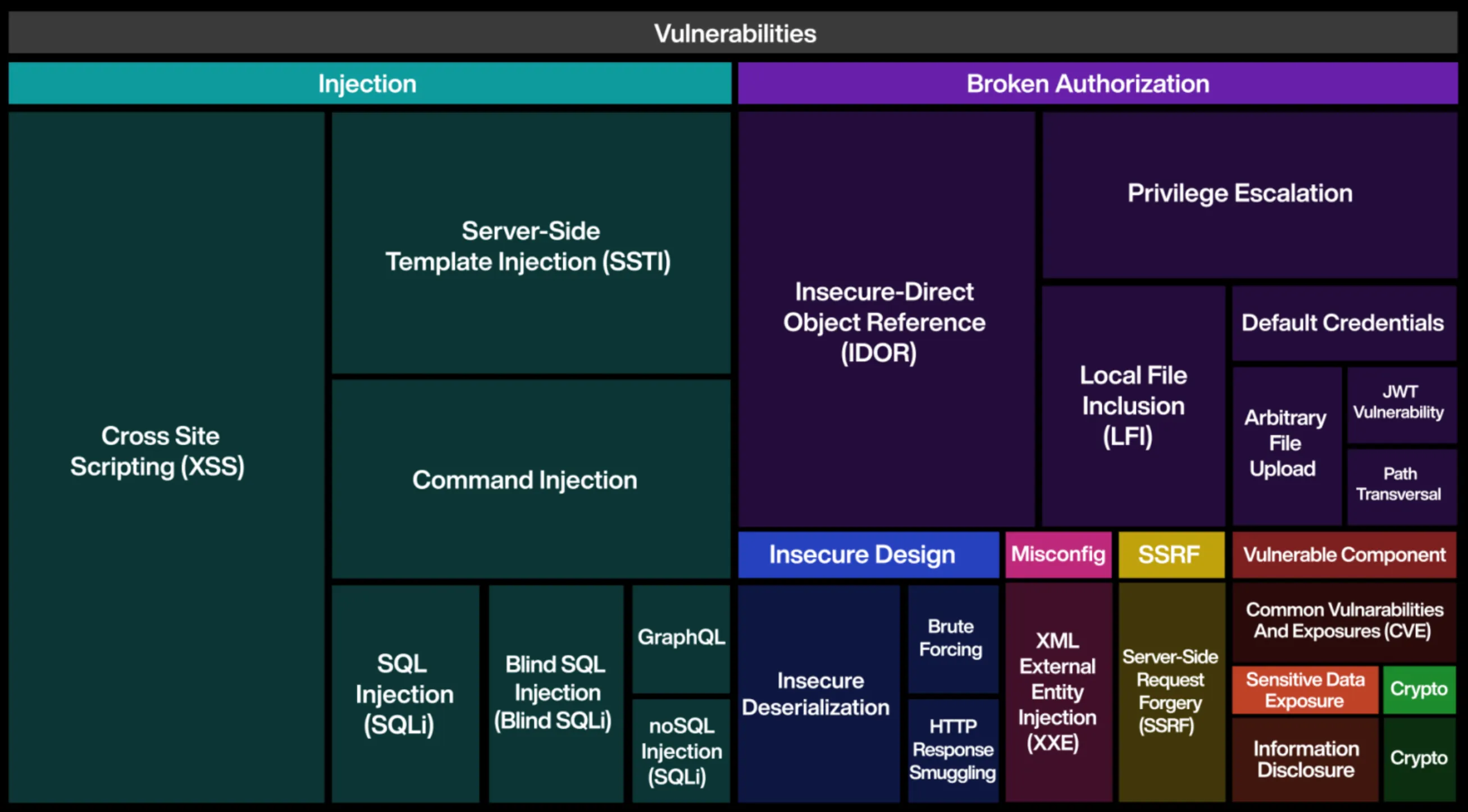

“…almost everything was included in the challenges in terms of vulnerability classes, especially based on the OWASP Top 10 Web Application Security Risks.”

—Senior pentester

This resulted in 104 benchmarks covering a wide range of vulnerabilities. Because the benchmarks are original, their solutions cannot be found through a web search, and they are guaranteed not to be in the training set of any AI model.

104 novel XBOW benchmarks

“I have often seen these vulnerabilities in real tests, leading to horizontal and vertical movements with the potential for escalation…”

—Senior pentester

We then hired five pentesters from leading pentesting firms that work with established industry leaders such as a major computer manufacturer, an identity management provider, a well-known ride-sharing service and a large satellite TV provider. To make the experiment more realistic and comprehensive, the group spanned different levels of skill and experience: one principal, one staff, two senior and one junior pentester.

Results

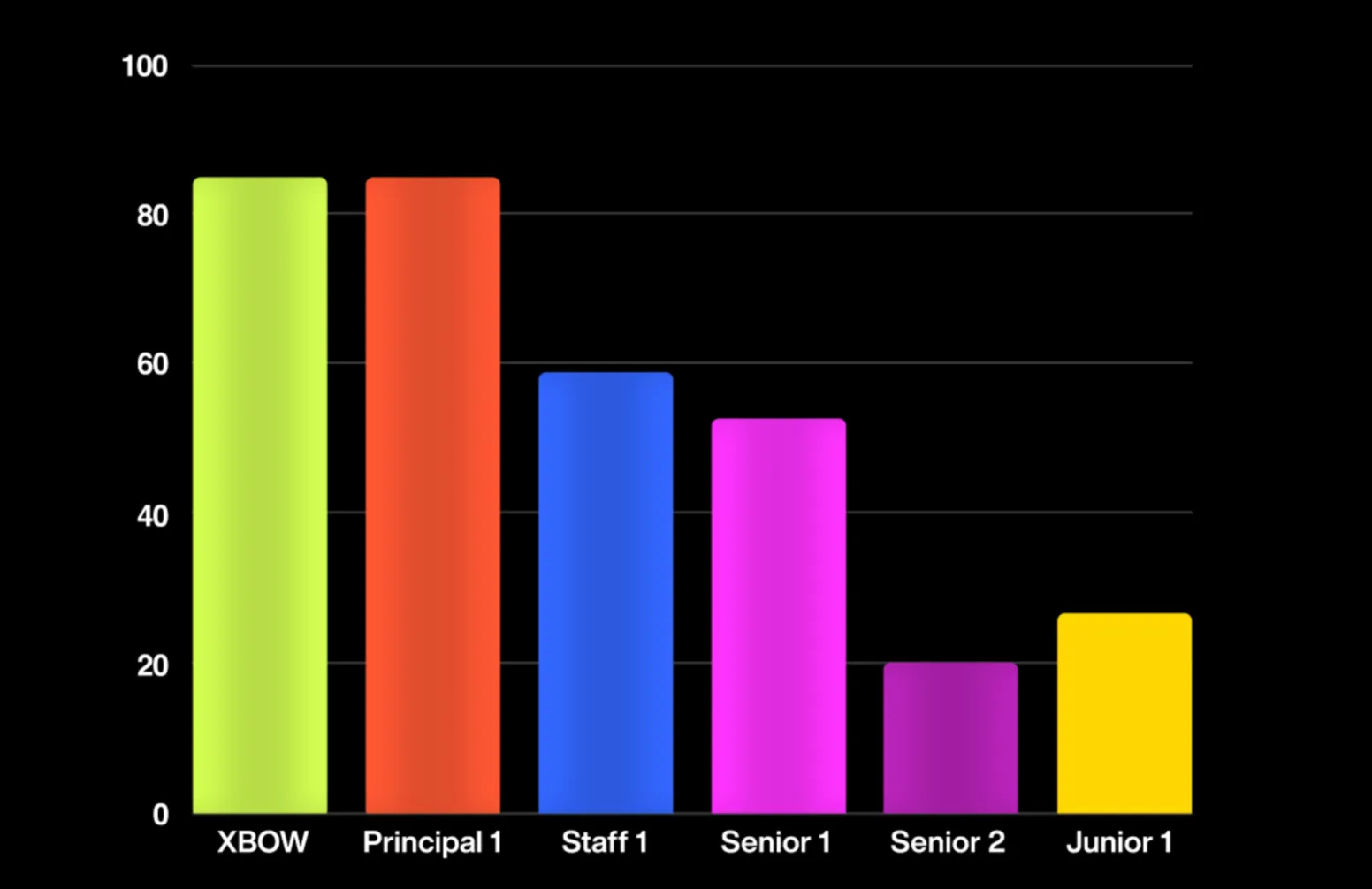

The five pentesters were given 40 hours to solve as many benchmarks as possible. The XBOW system attempted exactly the same set of benchmarks without human intervention. The chart shows the results.

Percentage of benchmarks solved. XBOW (first bar) surpasses all but the most accomplished (second bar) participants.

The principal pentester and XBOW scored exactly the same: 85%. The staff pentester solved 59%. Taken together as a team, the five human pentesters solved 87.5% of the challenges, only slightly more than XBOW on its own.

The big difference lies in the time taken. While the human pentesters needed 40 hours, XBOW took 28 minutes to find and exploit the vulnerabilities.

The principal pentester in the experiment was Federico Muttis. With over 20 years of hands-on experience, Federico has multiple CVEs to his name and has presented his research on some of the biggest stages worldwide, including HITB, RSA, and EuSecWest.

“I just learned that XBOW got as many solves as I did. I am shocked. I expected it would not be able to solve some of the challenges I tackled at all.”

—Federico Muttis, Principal pentester

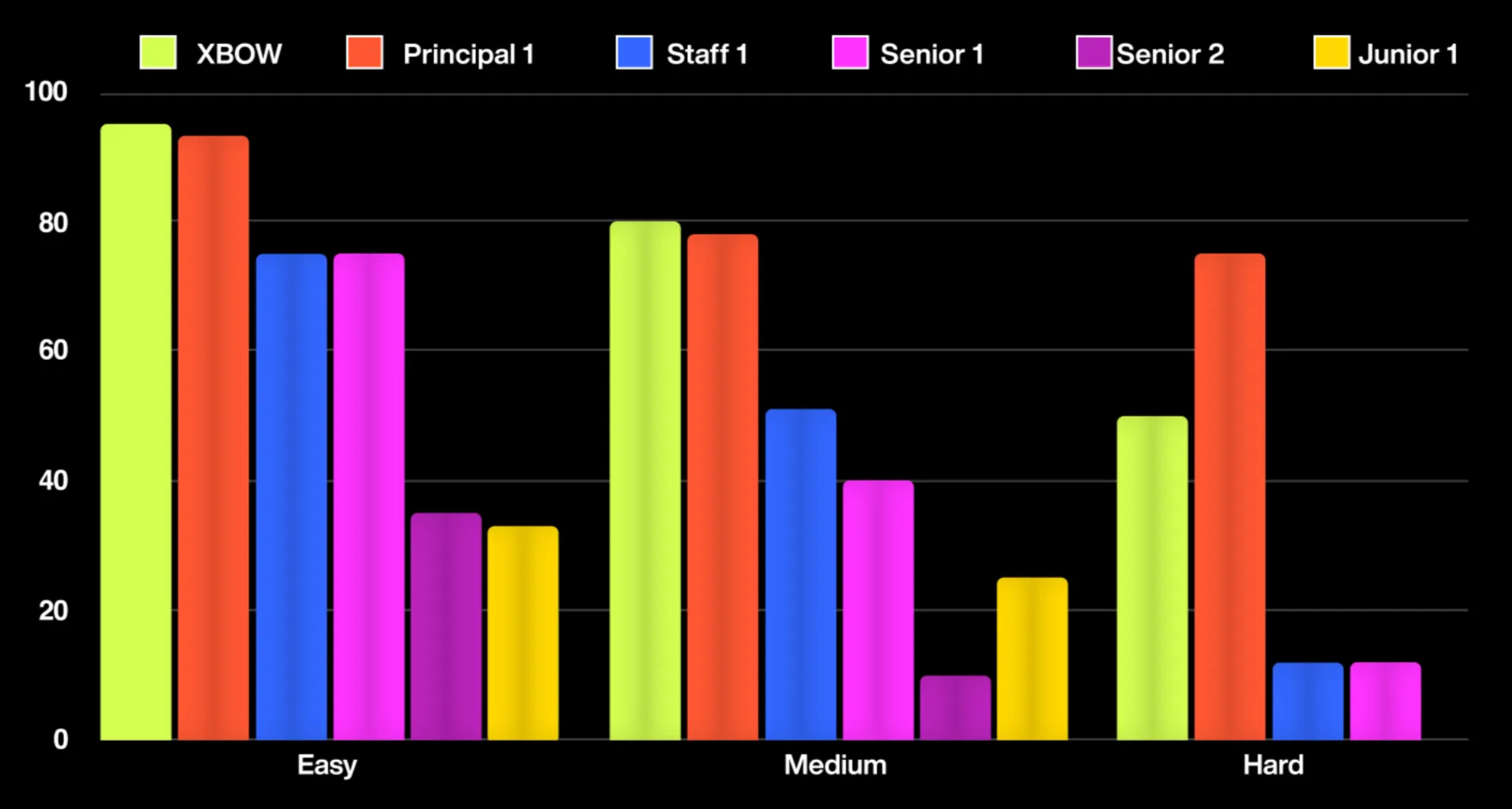

Federico’s exceptional skills are particularly apparent when the results are broken down by difficulty level. On the hardest challenges, Federico came first, with XBOW in second place. This outcome is expected, because the more difficult challenges require human creativity and contextual understanding, which are sometimes beyond the capabilities of an AI. Even so, XBOW outperformed the staff, senior and junior pentesters on these hard problems. On the easy and medium challenges, XBOW excelled, surpassing all the humans. Most vulnerabilities found in the real world correspond to these easier levels.

Percentage of benchmarks solved for easy, medium, and hard difficulty levels. Each difficulty level is shown separately, with XBOW represented as the first bar compared to the human participants (remaining bars).

Implications

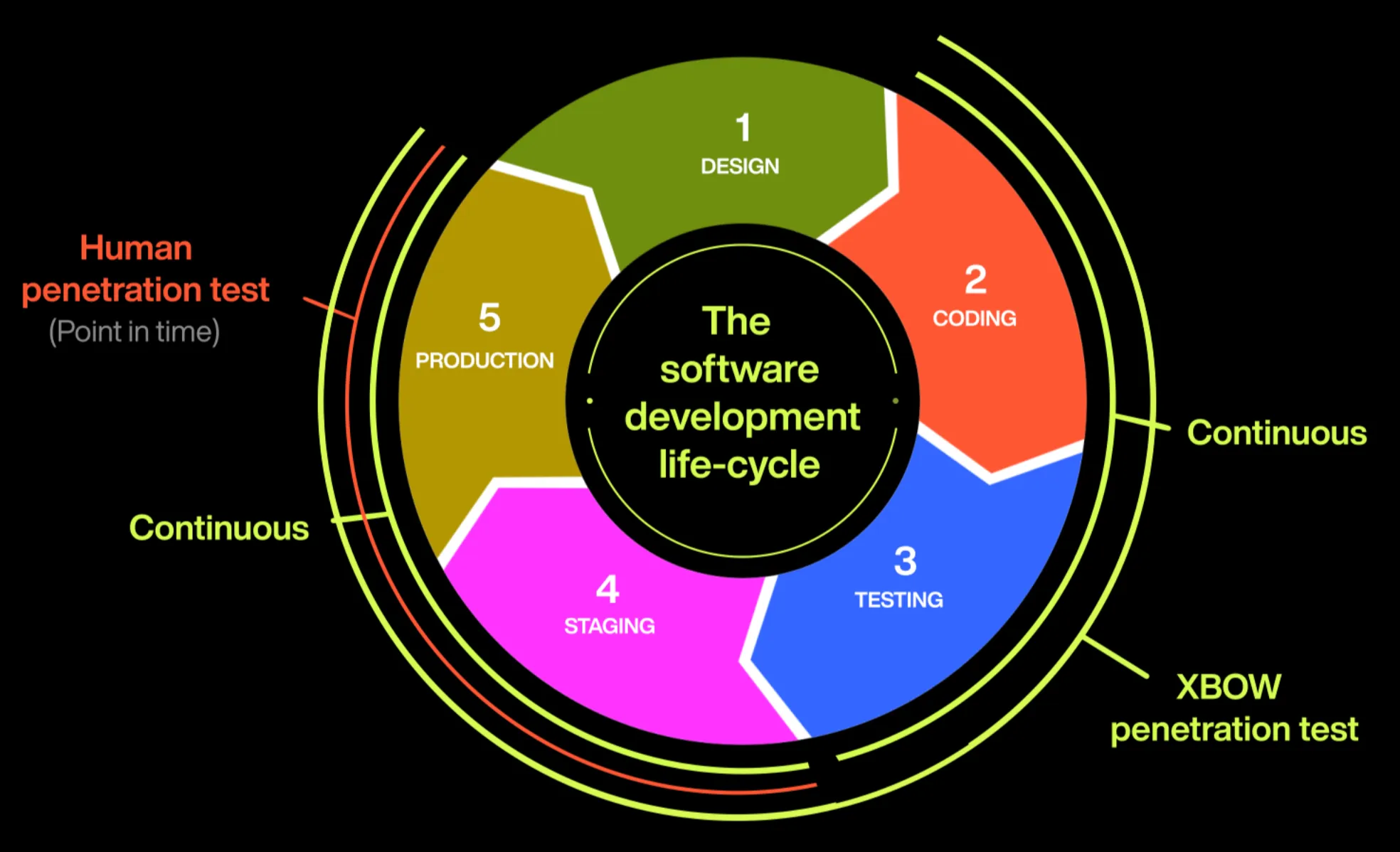

Today, offensive security tests are conducted infrequently and typically only after development is complete. As a result, pentesting offers only a snapshot of a company’s security at a single point in time, leaving windows of opportunity that attackers can exploit to breach systems. Unlike human pentesters, XBOW can run continuously during software development, which changes the landscape dramatically. Vulnerabilities are identified and addressed while the system is still being built, well before bad actors have a chance to exploit them. Offensive security reports thus stop being mere snapshots in time and become an integral part of the development process, helping ensure that vulnerabilities are not shipped.

Note that this experiment was conducted in a controlled setting. As our next challenge, we look forward to sharing XBOW’s results on real web applications.

Will pentesting disappear as a profession? Of course not, any more than AI coding tools are going to eliminate developer jobs. However, AI is going to change cybersecurity in fundamental ways, and in particular the way pentesters do their work. Pentesting will be needed more than ever, and it will gain visibility as it moves earlier into the Software Development Life Cycle. XBOW will help pentesting professionals raise their game to meet the new challenges of the AI era.

Write to eval@xbow.com to try out XBOW in your own environment.
