Agents Built From Alloys

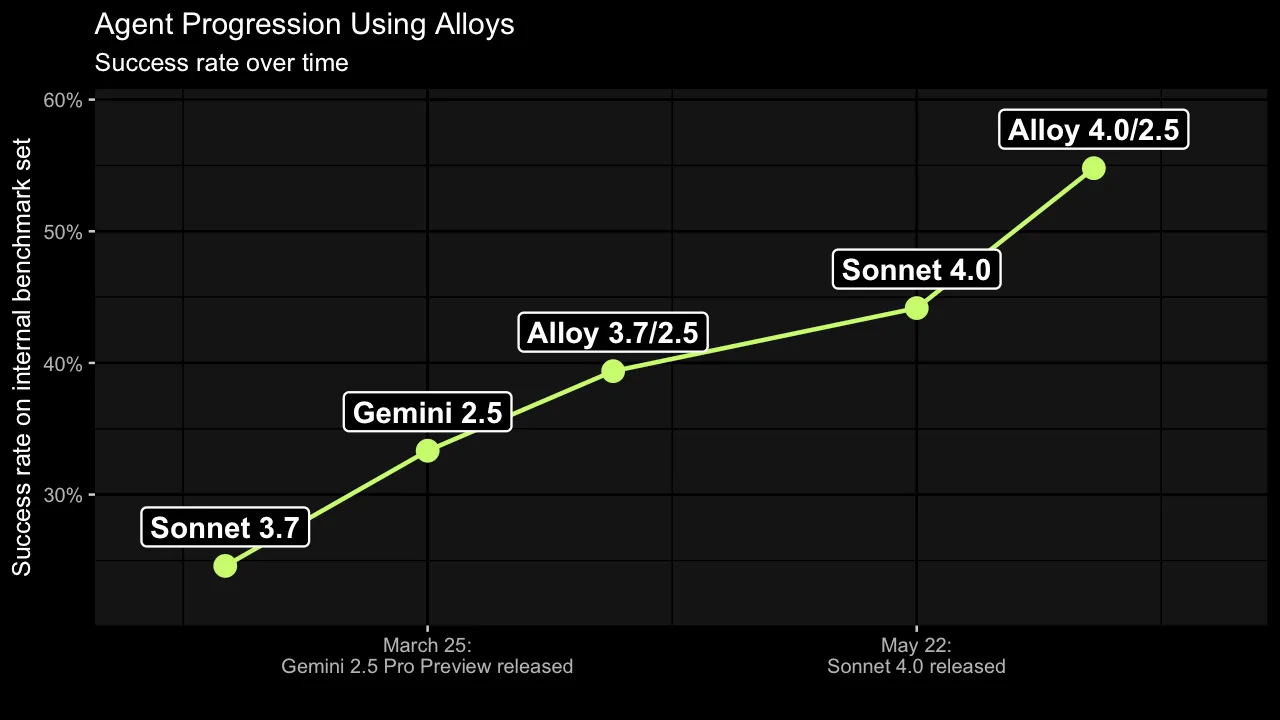

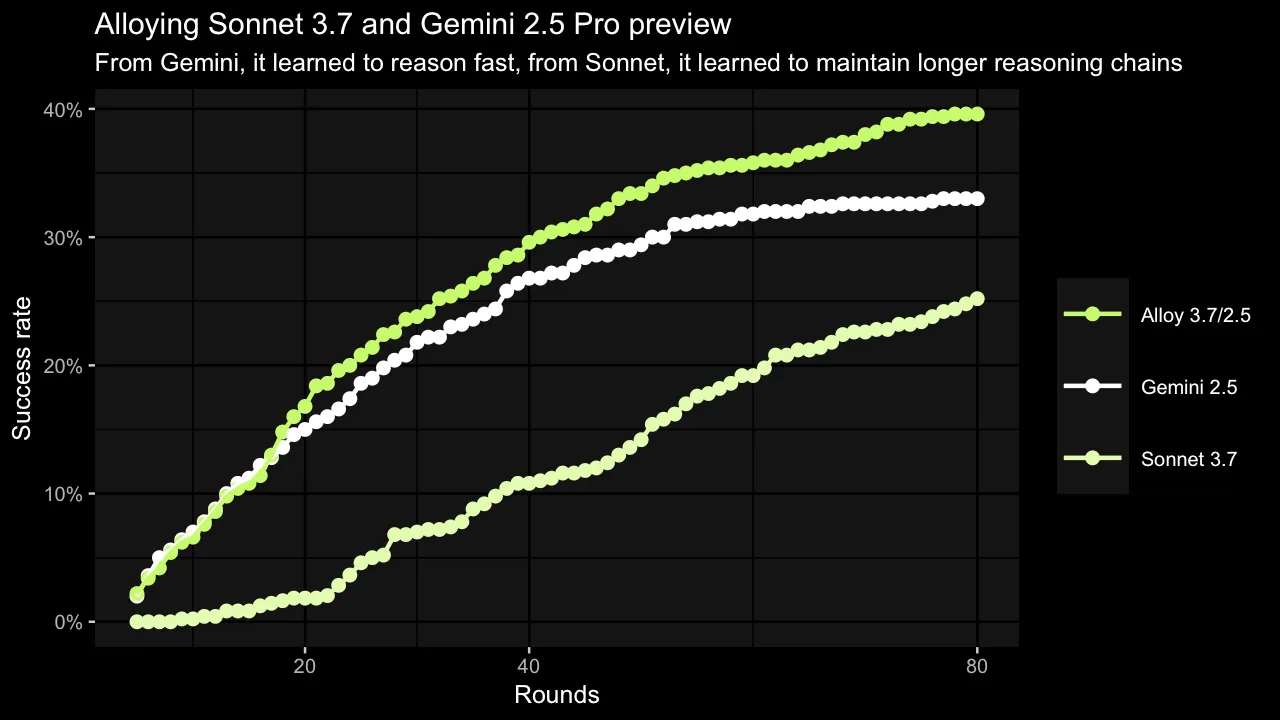

This spring, we had a simple and, to my knowledge, novel idea that turned out to dramatically boost the performance of our vulnerability detection agents at XBOW. On fixed benchmarks and with a constrained number of iterations, we saw success rates rise from 25% to 40%, and then soon after to 55%.

The principles behind this idea are not limited to cybersecurity. They apply to a large class of agentic AI setups. Let me share.

XBOW’s Challenge

XBOW is an autonomous pentester. You point it at your website, and it tries to hack it. If it finds a way in (something XBOW is rather good at), it reports back so you can fix the vulnerability. It’s autonomous, which means: once you’ve done your initial setup, no further human handholding is allowed.

There is quite a bit to do and organize when pentesting an asset. You need to run discovery and create a mental model of the website, its tech stack, logic, and attack surface, then keep updating that mental model, building up leads and discarding them by systematically probing every part of it in different ways. That’s an interesting challenge, but not what this blog post is about. I want to talk about one particular, fungible subtask that comes up hundreds of times in each test, and for which we’ve built a dedicated subagent: you’re pointed at a part of the attack surface knowing the genre of bug you’re supposed to be looking for, and you’re supposed to demonstrate the vulnerability.

It’s a bit like competing in a CTF challenge: try to find the flag you can only get by exploiting a vulnerability that’s placed at a certain location. In fact, we built a benchmark set of such tasks, and packaged them in a CTF-like style so we could easily repeat, scale, and assess our “solver agent’s” performance on it. The original set has, sadly, mostly outlived its usefulness because our solver agent is just too good at it by now, but we harvested more challenging examples from open-source projects we ran XBOW on.

The Agent’s Task

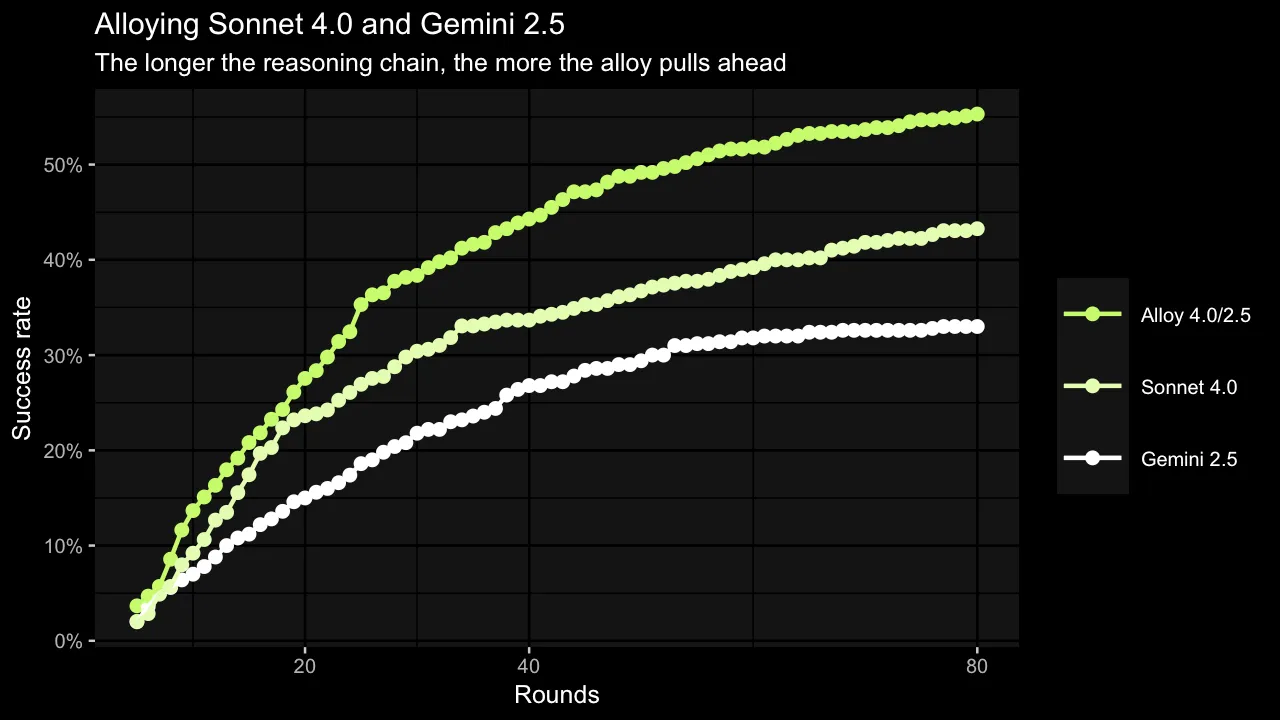

On such a CTF-like challenge, the solver is basically an agentic loop set to work for a number of iterations. Each iteration consists of the solver deciding on an action: a command in a terminal, writing a Python script, running one of our pentesting tools. We vet the action and execute it, show the solver its result, and the solver decides on the next one. After a fixed number of iterations we cut our losses. Typically and for the experiments in this post, that number is 80: while we still get solves after more iterations, it becomes more efficient to start a new solver agent unburdened by the misunderstandings and false assumptions accumulated over time.
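The loop described above, with its fixed budget and fresh restarts, can be sketched roughly as follows. This is a minimal illustration, not XBOW’s code: `choose_action`, `execute`, and `is_solved` are hypothetical stand-ins for the model call, the vetted execution step, and the flag check.

```python
from typing import Callable, Dict, List, Optional, Tuple

MAX_ITERATIONS = 80  # per-agent budget used in the experiments described above

Message = Dict[str, str]


def run_solver(task: str,
               choose_action: Callable[[List[Message]], str],
               execute: Callable[[str], str],
               is_solved: Callable[[str], bool],
               max_iterations: int = MAX_ITERATIONS) -> Optional[int]:
    """Run one solver agent; return the iteration that solved the task, or None."""
    messages: List[Message] = [{"role": "user", "content": task}]
    for iteration in range(1, max_iterations + 1):
        action = choose_action(list(messages))  # the model proposes the next action
        messages.append({"role": "assistant", "content": action})
        result = execute(action)                # vetting and execution happen here
        if is_solved(result):
            return iteration
        messages.append({"role": "user", "content": result})  # show the model the result
    return None  # budget exhausted: cut our losses


def run_with_restarts(task: str,
                      choose_action: Callable[[List[Message]], str],
                      execute: Callable[[str], str],
                      is_solved: Callable[[str], bool],
                      attempts: int) -> Optional[Tuple[int, int]]:
    """Start fresh agents, each with an empty history, until one succeeds."""
    for attempt in range(1, attempts + 1):
        solved_at = run_solver(task, choose_action, execute, is_solved)
        if solved_at is not None:
            return attempt, solved_at
    return None
```

Each restart builds a new `messages` list, which is the point: the next agent starts without the misunderstandings its predecessor accumulated.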

What makes this task special, as an agentic task? Agentic AI is often used on the continuously-make-progress type of problems, where every step brings you closer to the goal. This task is more like prospecting through a vast search space: the agent digs in many places, follows false leads for a while, and eventually course corrects to strike gold somewhere else.

Over the course of one challenge, among all the dead ends, the AI agent will need to come up with and combine a couple of great ideas.

If you ever face an agentic AI task like that, model alloys may be for you.

The LLM

From our very beginning, it was part of our AI strategy that XBOW be model-provider agnostic. That means we can just plug and play the best LLM for our use case. Our benchmark set makes it easy to compare models, and we continuously evaluate new ones. For a while, OpenAI’s GPT-4 was the best off-the-shelf model we evaluated, but after Anthropic’s Sonnet 3.5 came along in June last year, no other provider came close for some time, no matter how many models we tested.

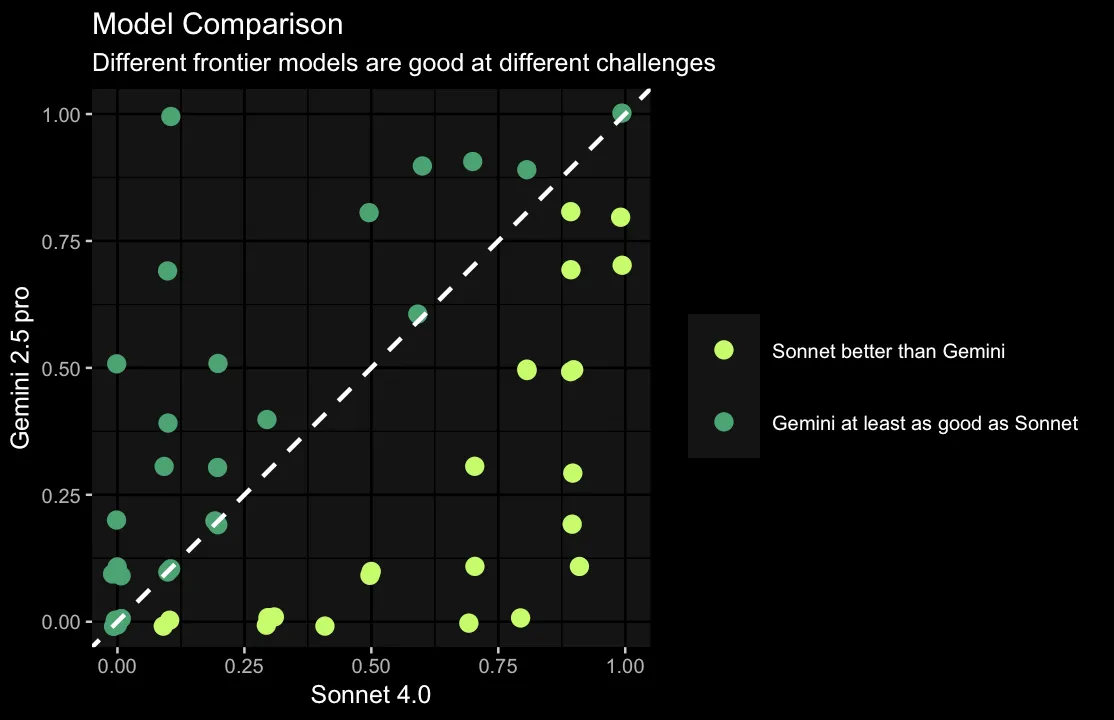

Sonnet 3.7 brought a modest but noticeable improvement over its predecessor, but when Google released Gemini 2.5 Pro (in preview in March), it was a real step up. Then Anthropic hit back with Sonnet 4.0, which performed better again. On average. On individual challenges, some are best solved by Gemini, some by Sonnet.

That’s not terribly surprising. If every challenge takes five good insights to crack, then some sets of five are the kind that come easily to Sonnet, and some sets of five come easily to Gemini. But what about the challenges that need five good ideas, three of which are the kind Sonnet is good at, and two of which are the kind Gemini is good at?
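To make this argument concrete, here is a toy probability model. Everything in it is an assumption for illustration, not measured data: each idea "comes easily" to one of two hypothetical models, S and G; a model call that gets a shot at an idea finds it with probability `p_strong` if the idea suits that model and `p_weak` otherwise; each idea gets a fixed number of such calls; ideas are independent; and a challenge needs all of them.

```python
from math import prod
from typing import Dict, Sequence


def p_idea(p_per_call: float, chances: int) -> float:
    """Chance an idea is found at least once across `chances` independent model calls."""
    return 1 - (1 - p_per_call) ** chances


def p_solve(idea_types: Sequence[str], model_mix: Dict[str, float],
            p_strong: float = 0.9, p_weak: float = 0.3, chances: int = 4) -> float:
    """Toy probability that a challenge is solved (all default parameters are made up).

    idea_types: which model each needed idea comes easily to, e.g. ["S", "S", "S", "G", "G"].
    model_mix: share of calls going to each model, e.g. {"S": 1} or {"S": 1, "G": 1}.
    """
    total = sum(model_mix.values())
    per_idea = []
    for kind in idea_types:
        # Per-call chance, averaged over which model happens to be called.
        p_call = sum(share / total * (p_strong if model == kind else p_weak)
                     for model, share in model_mix.items())
        per_idea.append(p_idea(p_call, chances))
    return prod(per_idea)  # every idea is needed; ideas assumed independent


mixed = ["S", "S", "S", "G", "G"]
print(round(p_solve(mixed, {"S": 1}), 2))          # ≈ 0.58
print(round(p_solve(mixed, {"G": 1}), 2))          # ≈ 0.44
print(round(p_solve(mixed, {"S": 1, "G": 1}), 2))  # ≈ 0.88
```

Under these invented numbers, randomly mixing the two models on the same call budget beats either model alone on the mixed challenge. For a challenge whose ideas all suit S, S alone still wins, so the toy model only favors alloys when the needed ideas are genuinely mixed.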

Alloyed Agents

Like most AI agents, ours calls the model in a loop. The idea behind an alloy is simple: instead of always calling the same model, sometimes call one and sometimes the other.

The trick is that you still keep to a single chat thread with one user and a single assistant. So while the true origin of the assistant messages in the conversation alternates, the models are not aware of each other. Whatever the other model said, they think it was said by them.

So in the first round, you might call Sonnet for an action to get started, with a prompt like this:

Let’s say it tells you to use curl. You do that and gather the output to present to the model. So now you call Gemini with a prompt like this:

Gemini might tell you to log in with the admin credentials, and you do that, and then you present the result to Sonnet:

Some of the messages Sonnet believes it wrote were actually authored by Gemini, and vice versa.

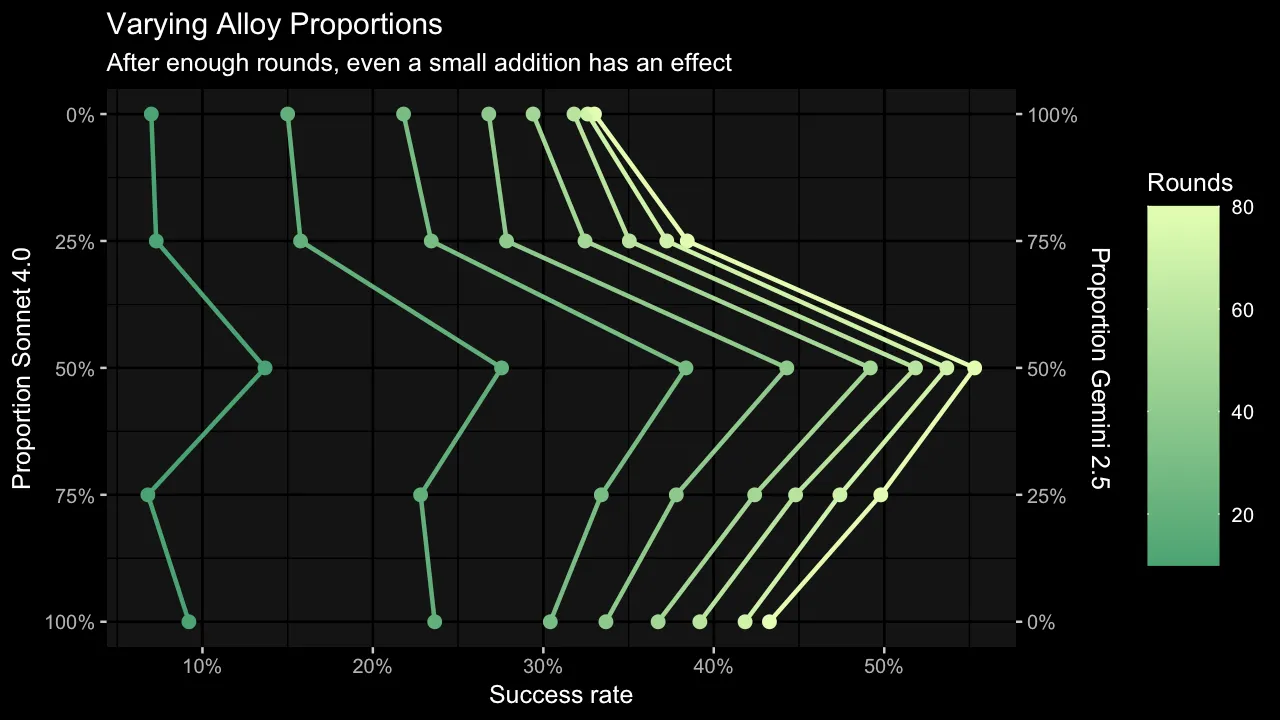

In our implementation, we actually make the model choice randomly for greater variation, but you could also alternate or experiment with more complex strategies.
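A minimal sketch of such a loop, assuming each model is wrapped in a hypothetical callable that takes the chat thread (a list of `{"role", "content"}` dicts) and returns the next action. The models only ever see `user` and `assistant` roles; who really wrote each assistant turn is kept in a separate `provenance` list that is never sent to a model. The optional `weights` argument is our own illustrative knob for skewing the mix toward one model; without it, every call picks uniformly at random.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

Message = Dict[str, str]
ModelFn = Callable[[List[Message]], str]  # hypothetical wrapper: thread in, next action out


def run_alloy(task: str,
              models: Dict[str, ModelFn],
              execute: Callable[[str], str],
              is_solved: Callable[[str], bool],
              weights: Optional[Dict[str, float]] = None,
              max_iterations: int = 80,
              rng: Optional[random.Random] = None) -> Tuple[Optional[int], List[str]]:
    """Alloyed agent loop: one shared thread, a randomly chosen model per call.

    Returns (iteration that solved the task or None, provenance list).
    """
    if rng is None:
        rng = random.Random()
    names = list(models)
    # Uniform by default; `weights` (hypothetical) can tilt the mix toward one model.
    mix = [(weights or {}).get(name, 1.0) for name in names]
    thread: List[Message] = [{"role": "user", "content": task}]
    provenance: List[str] = []  # true author of each assistant turn, never sent to a model
    for iteration in range(1, max_iterations + 1):
        name = rng.choices(names, weights=mix, k=1)[0]
        # The chosen model sees a plain user/assistant thread, so it takes every
        # earlier assistant turn to be its own, whichever model actually wrote it.
        action = models[name](list(thread))
        thread.append({"role": "assistant", "content": action})
        provenance.append(name)
        result = execute(action)
        if is_solved(result):
            return iteration, provenance
        thread.append({"role": "user", "content": result})
    return None, provenance
```

The total number of model calls is the same as for a single-model agent; only the choice of model per call changes. Passing a copy of the thread keeps a wrapper from accidentally mutating the shared history.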

The key advantage of mixing the two models into an alloy is that:

- you keep the total number of model calls the same, but still

- you give each model the chance to contribute its strengths to the solution.

In a situation where a couple of brilliant ideas are interspersed with workhorse-like follow-up actions, this is a great way to combine the strengths of different models.

Results

Just as an alloy of metals is stronger than its individual components, whichever two (and sometimes three) models from different providers we combined, the alloy outperformed the individual models. Sonnet 3.7, GPT-4.1, Gemini 2.5 Pro, and Sonnet 4.0 all performed better when alloyed with a model from another provider than when used alone.

But there are a couple of trends we observed:

- The more different the models are, the better the alloy performs. Sonnet 4.0 and Gemini 2.5 Pro have the lowest correlation in per-challenge solve rates (a Spearman correlation coefficient of 0.46), and their alloy gets the biggest boost.

- A model that’s better individually will tend to be better in an alloy. A model lagging very far behind others can even pull an alloy down.

- Imbalanced alloys should lean towards the stronger individual model: if you don’t split the calls evenly, give the larger share to the model that is better on its own. We’ll show some examples below.
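The correlation figure quoted above is a Spearman rank correlation over per-challenge solve rates. A dependency-free sketch of that computation follows; the input format (one solve rate per challenge, in the same challenge order for both models) is our assumption, and `scipy.stats.spearmanr` computes the same coefficient if SciPy is available.

```python
from statistics import mean
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (vx * vy) ** 0.5


def spearman(rates_a: Sequence[float], rates_b: Sequence[float]) -> float:
    """Spearman rank correlation between two models' per-challenge solve rates."""
    if len(rates_a) != len(rates_b):
        raise ValueError("both models must be scored on the same challenges")
    return pearson(average_ranks(rates_a), average_ranks(rates_b))
```

A coefficient near 1 means the two models find the same challenges easy and hard, which, going by the trends above, suggests little to gain from alloying them.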

When To Use Model Alloys

Think of alloys if:

- You approach your task by calling an LLM in an iterative loop until you reach a solution, with at least a double-digit number of model calls.

- The task requires a number of different ideas to be combined to solve it.

- But those ideas can come at different points in the process.

- You have access to sufficiently different models.

- All these models have their own strengths and weaknesses.

When Not To Use Model Alloys

Model alloys can be great, but they do have drawbacks. Situations that might make you think twice:

- Your prompts are orders of magnitude longer than your completions, so you rely heavily on prompt caching to keep your costs down. With alloys, you need to cache everything twice, once for each model.

- Your task is one of steady progress, not the occasional burst of brilliance that alloys are good at combining. In that case, your alloy will probably perform about as well as the average of its constituents.

- You have a task only one model really excels at. Then you have nothing to alloy your favorite model with.

- All your models agree on which tasks are hard and which are easy, and so they will not complement each other.

That latter point hit home for us when we tried to alloy different models from the same provider. When alloying Sonnet 3.7 and Sonnet 4.0, or Sonnet and Haiku, we saw performance that mirrored the average of the two constituents, no more. They were simply too similar to each other.

It was only when combining models from different providers that we saw a real boost.

That Reminds Me Of…

We’re obviously not the first ones to realize that two heads are better than one, and there are a myriad of ways to combine the strengths of different models. Most of them fall into one of three categories though:

- Use different models for different tasks, something heavily emphasized in, e.g., the AutoGPT ecosystem. It’s not always easy to define these different tasks, but one common pattern is to use a higher-tier model to do the planning and a more specialized model to execute the plan. The higher-tier model may periodically check in on progress to offer advice or adjust the plan. This is a good solution in many cases; we were put off by the overhead it would add to our loop.

- Ask different models, or the same model with different prompts, at each step. Then you either combine the answers, take a vote, or use yet another model call as a judge to decide which answer is best. Mixture-of-Agents is a great example of that. Of course, this multiplies the number of model calls, and wouldn’t be efficient for our use case (we’d rather start more independent agents!).

- Let models talk to each other directly, making their own case and refining each other’s answers. Exemplified by patterns like Multi-Agent Debate, this is a great solution for truly critical individual actions. But XBOW is basically conducting a search, and it doesn’t need a committee to decide, for every stone it turns over, whether there might be a better one.

And obviously, you could just run one agent with Sonnet and one with Gemini, and count it as a win if either of them solves the challenge. But since there’s a performance gap between those two models, that isn’t even competitive with running only Sonnet 4.0, let alone with running an alloyed agent.
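This kind of comparison can be checked on per-challenge solve rates. The sketch below is illustrative only: it assumes independent runs, the function names are ours, and the rates are made up rather than taken from our benchmark.

```python
from typing import Dict, List, Sequence


def p_any(rates: Sequence[float]) -> float:
    """Chance that at least one of several independent runs solves a challenge."""
    miss = 1.0
    for rate in rates:
        miss *= 1.0 - rate
    return 1.0 - miss


def expected_solved(per_challenge: List[Dict[str, float]], portfolio: Sequence[str]) -> float:
    """Expected number of challenges solved by running one agent per entry in `portfolio`.

    per_challenge: one dict per challenge mapping model name -> solve rate.
    """
    return sum(p_any([rates[model] for model in portfolio]) for rates in per_challenge)


# Hypothetical rates where model G trails model S on every challenge.
gap = [{"S": 0.6, "G": 0.2}, {"S": 0.5, "G": 0.3}]
print(expected_solved(gap, ["S", "S"]), expected_solved(gap, ["S", "G"]))

# Hypothetical rates where the two models are perfectly complementary.
complementary = [{"S": 0.9, "G": 0.0}, {"S": 0.0, "G": 0.9}]
print(expected_solved(complementary, ["S", "S"]), expected_solved(complementary, ["S", "G"]))
```

With the first set of invented rates, two S runs are expected to solve about 1.59 challenges against about 1.33 for the mixed pair; with the second, the mixed pair wins. Which case applies depends on both the performance gap and how correlated the two models are.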

Data

If you want to play around with our data, do go ahead: we’re sharing it here. Maybe you’ll see something we missed.

More interestingly though, if you have a use case where you think model alloys might help, try it out! And write to me about it at albert@xbow.com — I’d love to hear about your experience!
